Large Language Models Could Influence Voter Attitudes in Elections

Post by Rebecca Glisson

The takeaway

Large language models (LLMs) such as ChatGPT can engage people in persuasive conversations that may change their opinions. When voters talked with an LLM about election candidates, their attitudes toward the candidates shifted in the direction the LLM was arguing for.

What's the science?

LLMs are increasingly used by the general public to quickly gather information about unfamiliar topics, even though these models can present misleading or false information as fact. There is growing concern about how this could affect voter decisions in democratic elections. This week in Nature, Lin and colleagues studied how interacting with LLMs can change voters' attitudes toward political candidates.

How did they do it?

The authors tested the effects of human-AI conversations on voter attitudes in four elections held in 2024 and 2025: the United States presidential election, a Massachusetts ballot measure on legalizing psychedelic drugs, the Canadian federal election, and the Polish presidential election. Participants first rated, on a scale of 0 to 100, their attitude toward each candidate or voting option and how likely they were to vote. Each participant then had a “conversation” with an LLM that tried to persuade them to support one candidate (or one side of the ballot measure) or the other. The authors used several different LLMs for the experiment, including the widely known ChatGPT as well as DeepSeek, Llama, and a combination of models using Vegapunk. Each model was instructed to keep the conversation positive and respectful while working to increase the participant’s support for its assigned candidate. After the conversation, participants again rated their support from 0 to 100, and the authors compared their answers before and after the interaction. Finally, the authors used a combination of Perplexity AI’s LLM and professional fact-checkers to assess the accuracy of the statements the LLMs made in their conversations with participants.

What did they find?

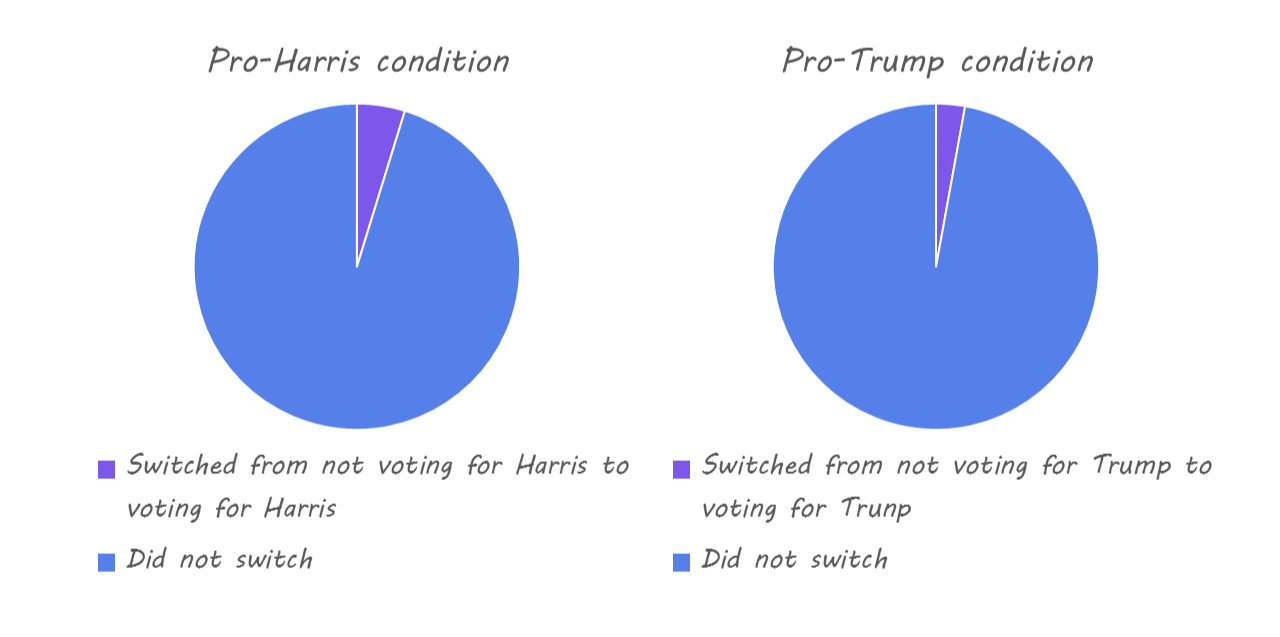

The authors found that in every group and election, participants were persuaded in whichever direction the model was assigned to argue. This effect was even stronger when participants interacted with an LLM advocating for the opposite of their initial preference. For example, if a person who initially supported Trump in the US presidential election talked with a pro-Harris LLM, their attitude shifted toward Harris more than a Harris supporter's attitude did after the same kind of conversation. The same trends held for the Massachusetts ballot measure and for the elections in Canada and Poland. The authors also found that, overall, the statements made by the LLMs were mostly accurate. Interestingly, however, LLMs arguing in support of the right-leaning candidate in each country made more inaccurate statements.

What's the impact?

This study is the first to show that LLMs, which have recently gained widespread popularity, can persuade people to change their opinions about candidates and ballot measures in real elections. While some might consider this an exciting new way to persuade voters, the tendency of LLMs to produce misinformation needs to be taken into account before they are used on a larger scale. Studies like this one are valuable for understanding the risks of a new technology before it is properly regulated.